How can we design and evaluate user interfaces and interaction paradigms for accessing 2D and 3D datasets involving uncertainty?

This is the question I addressed in my project stream within Virtual Interiors. My work involved designing, creating and evaluating a demonstrator for a 3D research environment, in a co-design setting with Chiara Piccoli and during embedded research at the project partners, the Netherlands Institute for Sound & Vision and Brill Publishers.

This post summarizes the work pertaining to the 3D research environment, points to additional resources and looks ahead to future opportunities.

1. The challenging task of 3D interface design

It is not straightforward to create user interfaces for accessing 3D reconstructions in a historical setting. User interfaces can be seen as an “in-between device” between the historically interested user and the actual 3D reconstructions and underlying data (see e.g. [1]).

Interaction with 3D environments and assets is challenging in itself, and navigation issues and disorientation are commonplace [2]. This affects the usability of a prospective interface [3]. In addition, usability is influenced by the number and complexity of interface features: the paradox is that more features might make an interface more useful to a researcher, but also harder to use. Striking a balance between functionality and usability is therefore of key importance.

Achieving this balance is all the more difficult because best practices for interface design in a 3D context are poorly documented. Hence, it is hard to determine which “standard” set of features is necessary for interacting with 3D historical content [4].

2. Exploring requirements

To shed more light on these issues, I spent the first months of my work as a postdoc in the Virtual Interiors project (2019) exploring the requirements for a 3D research environment. This was done via an analysis of previous use cases for 3D and design thinking [5] sessions with the other project members. Requirements that came up included the ability to view the 3D space from different perspectives, to interact with objects, to add analytical layers (uncertainty & lighting), to annotate and to view context information. Furthermore, a user study with digital humanists was conducted [6].

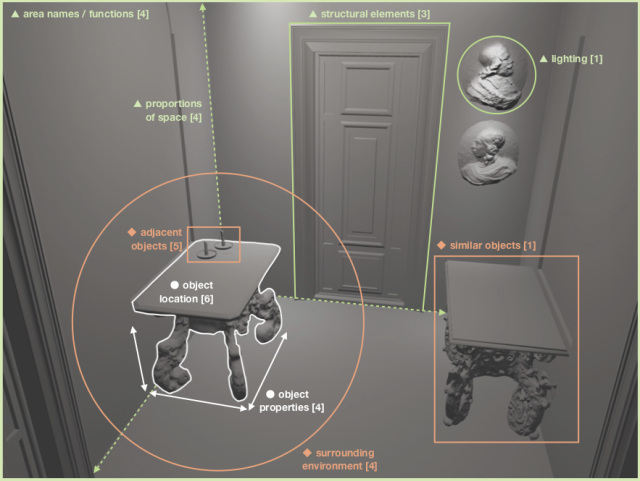

From this user study, it became apparent that historical and spatial context are of key importance for a 3D research environment. From a thematic analysis of session transcripts, we identified the spatial context dimensions object properties, object relationships, and perception of space, pictured in Figure 1.

Figure 1: Spatial context dimensions (● object properties, ⬥ object relationships & ▲ perception of space)

More information:

📖 Hugo Huurdeman & Chiara Piccoli (2020), The Importance of Context in Search User Interfaces for 3D Content (Proceedings CHIIR 2020)

3. The Virtual Interiors 3D research environment

Based on the initial phase of analyzing requirements, a prototype research environment was designed and developed in an iterative way. The prototype addressed the requirements mentioned above. While a full discussion of its functionality, the rationale behind it, the technical structure and the reconstruction process is available elsewhere [16], I discuss some key features here.

Analytical & Experiential user interfaces

Historical 3D environments can be utilized by widely different audiences, ranging from the casually interested to topical experts. For these audiences, different user interface features can be worthwhile. Instead of creating one interface to cater for all of their needs, which may lead to the aforementioned tensions between functionality and usability, I opted for a different approach.

To cater for these different audiences and their goals, we constructed a “multi-layer interface” [7], in which two distinct interfaces use the same underlying framework and data. On the one hand, there is an analytical interface layer, aimed at researchers, and on the other hand a more experiential interface layer, which can be used by both researchers and other historically interested individuals:

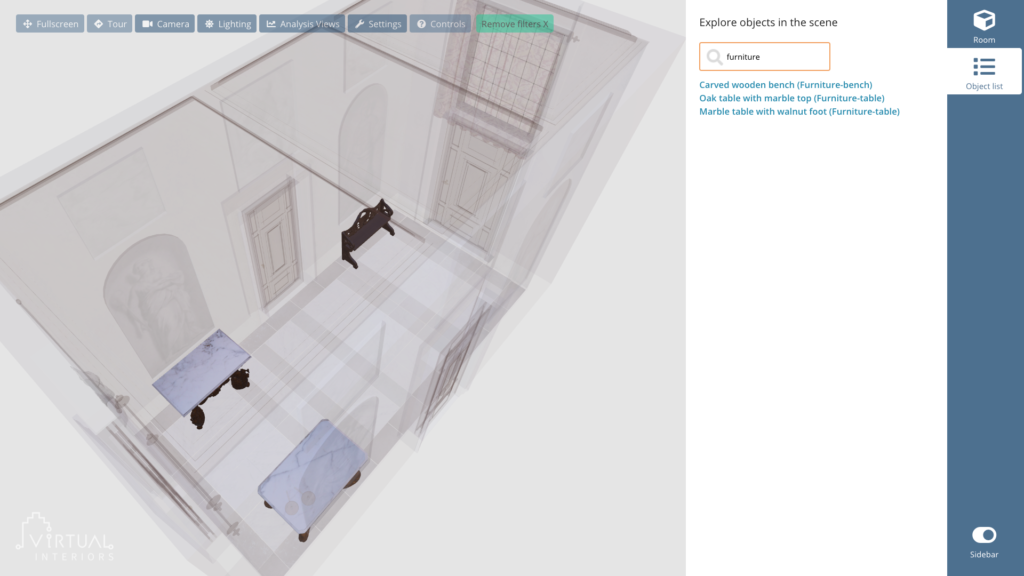

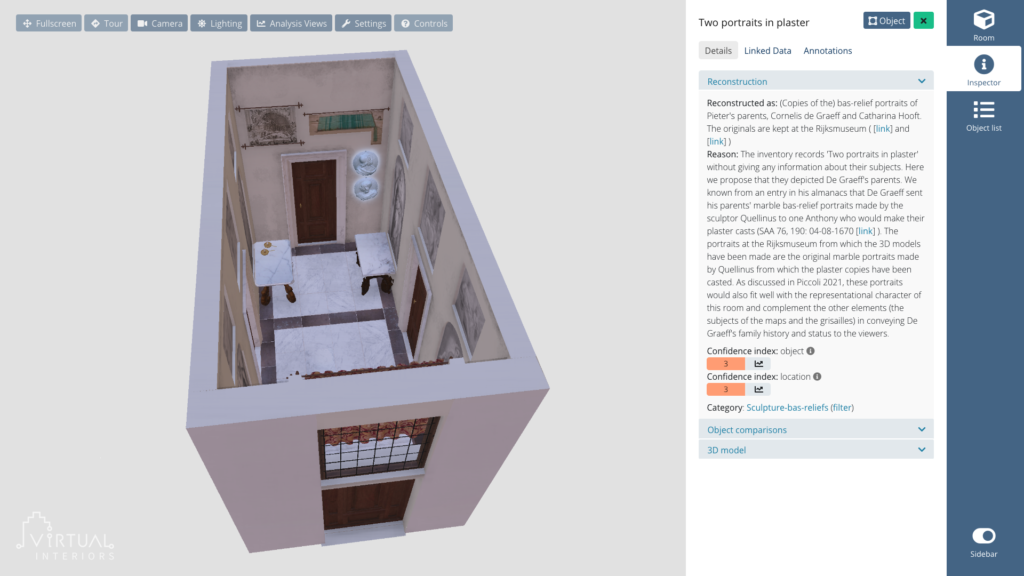

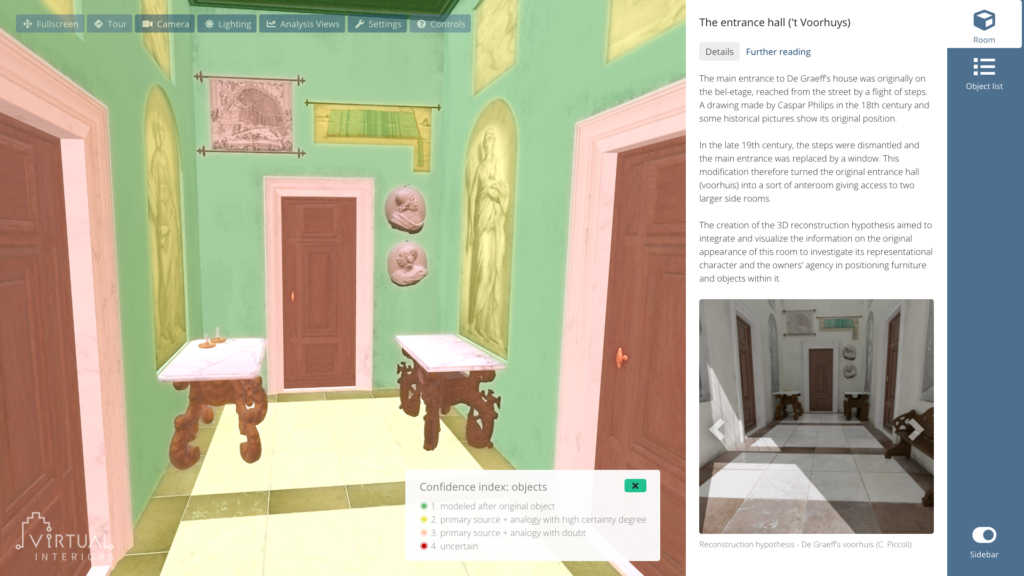

- The Analytical interface allows researchers to take a deep dive into underlying research data about domestic interiors, view uncertainty overlays, explore associated archive documents and follow leads to connected Linked Data, for instance by examining artist biographies, related artworks and various historical data sources. Moreover, it is possible to explore hypotheses related to object arrangement and lighting. These analytical interfaces are best experienced via desktop computers or tablets (see Figure 2A & 2B).

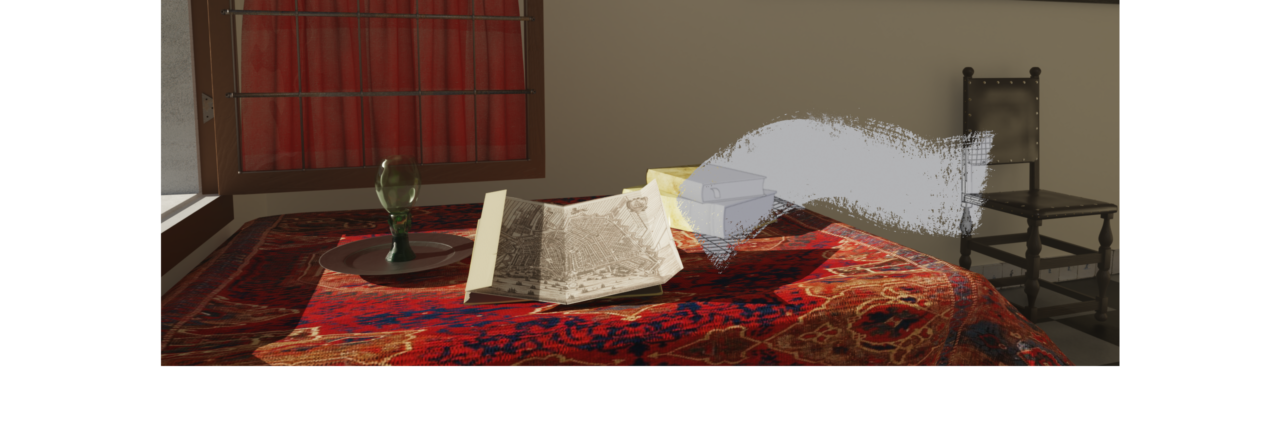

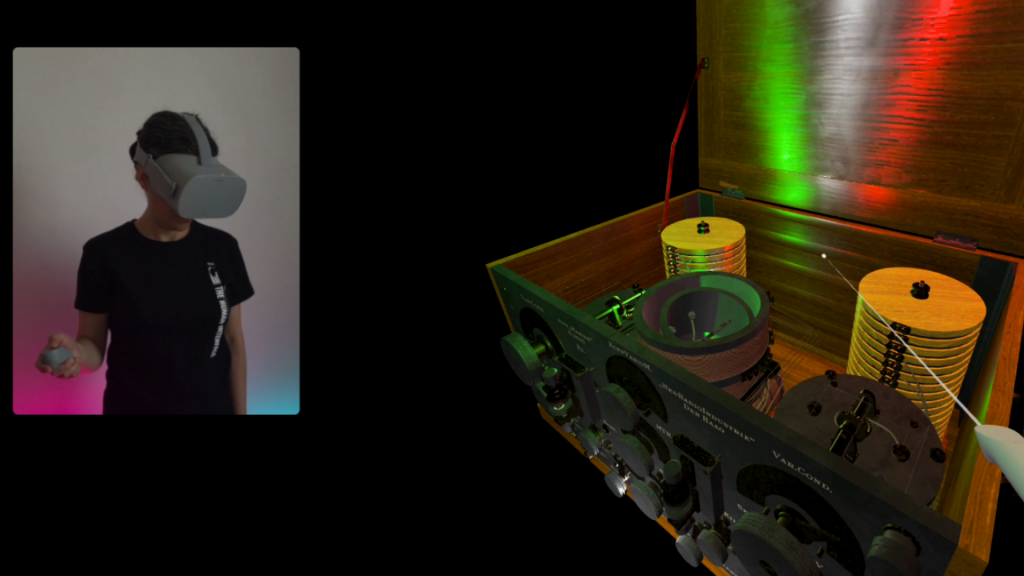

- The Experiential interface makes it possible to experience historical spaces intuitively, e.g. by utilizing Virtual Reality and the motion sensors of mobile phones (see Figure 2C & 2D). Casual users and researchers can “jump into” a 3D historical space, look around and interact with the objects around them in a more immersive way [8]. Experiential views have been explored in the context of Chiara Piccoli’s 3D reconstructions of domestic interiors, but also during my embedded research at the Netherlands Institute for Sound & Vision.

Read more about analytical interfaces:

📖 Hugo Huurdeman, Chiara Piccoli (2021), 3D Reconstructions as Research Hubs (Open Archaeology journal)

Read more about experiential interfaces:

📖 Hugo Huurdeman, Jesse de Vos (2020). Visualizing a Radio of the Past using Technology of the Future (blogpost, Neth. Inst. for Sound & Vision, R&D blog, with link to early 3D demo)

Integrating Linked Data

Another key point of differentiation between the Virtual Interiors research environment and other 3D frameworks [9] is the pivotal role of Linked Data [10] in enriching our content. Linked Data provides a common approach to interconnecting data more easily, and has also been used in the context of (digital) history [11]. It has more rarely been applied, however, in the context of 3D reconstructions [12].

For the Virtual Interiors 3D reconstructions, we use Linked Data to dynamically integrate biographical information from Ecartico, but also additional (and visual) resources pertaining to the reconstructions at hand. For instance, selecting Quellinus’ portraits in the entrance hall of the reconstructed house of Pieter de Graeff and Jacoba Bicker reveals a gallery of works by the same creator, and works of a similar type (from Adamlink and Wikidata). Linked Data can have added value for these kinds of interfaces, although the SPARQL [13] queries involved need to be designed carefully, and it is very important to clearly display provenance.
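To give an impression of what such a query could look like, here is a minimal sketch in Python. It is not the code used in the demonstrator: the function names, the result fields and the `source` label are assumptions made for illustration. The only external facts it relies on are the Wikidata properties P170 (creator) and P18 (image). The sketch builds a query for works by a given creator and tags each result row with its source, so that provenance can be shown in the interface.

```python
import re
from string import Template

# Wikidata property IDs: P170 = creator, P18 = image.
WORKS_BY_CREATOR = Template("""
PREFIX wd: <http://www.wikidata.org/entity/>
PREFIX wdt: <http://www.wikidata.org/prop/direct/>
PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>

SELECT ?work ?label ?image WHERE {
  ?work wdt:P170 wd:$creator_id .
  OPTIONAL { ?work wdt:P18 ?image . }
  OPTIONAL { ?work rdfs:label ?label . FILTER(LANG(?label) = "en") }
}
LIMIT $limit
""")


def build_works_query(creator_id: str, limit: int = 20) -> str:
    """Return a SPARQL query for works by one creator (hypothetical helper)."""
    # Only accept well-formed Wikidata item IDs, so that user input
    # cannot inject arbitrary SPARQL into the query string.
    if not re.fullmatch(r"Q[1-9]\d*", creator_id):
        raise ValueError(f"not a Wikidata item ID: {creator_id!r}")
    if limit < 1:
        raise ValueError("limit must be at least 1")
    return WORKS_BY_CREATOR.substitute(creator_id=creator_id, limit=limit)


def with_provenance(rows: list[dict], source: str) -> list[dict]:
    """Copy each result row and record which dataset it came from."""
    return [{**row, "source": source} for row in rows]
```

The resulting string could be sent to a SPARQL endpoint with any HTTP client. Keeping the `source` field attached to every row is one simple way to make sure the gallery can always state where an image or biography came from.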

The connection between Linked Data and both the experiential and analytical interfaces is also illustrated by Figures 5 and 6. The analytical interface shows biographies, links and galleries based on Linked Data in the sidebar, while the experiential interface displays image galleries via a spatial projection surrounding and immersing the user.

Read more:

📖 Hugo Huurdeman, Chiara Piccoli, Leon van Wissen (2021), Linked Data in a 3D Context (DH Benelux 2021).

Analytical searching

Another important element of our research environment demonstrator is the ability to do analytical searching. As indicated in [6], 3D scenes and reconstructions can potentially consist of a vast number of objects and details, which may be hard to explore due to issues in navigating 3D spaces. Using the demonstrator’s approach, it is possible to browse a textual list of objects in the scene, but also to highlight single objects as well as categories of objects, while keeping the context of the surrounding objects. For instance, a user might want to see all furniture in the entrance hall of Pieter de Graeff’s and Jacoba Bicker’s house (Figure 5). These elements are highlighted, while the surrounding objects remain (dimly) visible.

Figure 5: Analytical search example (“furniture”)
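The highlighting idea can be sketched in a few lines of Python. This is an illustration only, not the demonstrator's implementation: the `SceneObject` class, the object names and the dimming value of 0.2 are all assumptions. The point is that a search does not hide non-matching objects, it merely lowers their opacity so that the spatial context is preserved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneObject:
    """A named object in the 3D scene with a single category (hypothetical)."""
    name: str
    category: str


def highlight_opacities(objects, category, dimmed=0.2):
    """Map each object name to an opacity for a category search.

    Matching objects are fully opaque; all others are dimmed rather
    than hidden, so the surrounding context stays visible.
    """
    if not 0.0 <= dimmed <= 1.0:
        raise ValueError("dimmed must be between 0 and 1")
    return {
        obj.name: 1.0 if obj.category == category else dimmed
        for obj in objects
    }


# Hypothetical scene content, for illustration only.
scene = [
    SceneObject("table", "furniture"),
    SceneObject("chair", "furniture"),
    SceneObject("portrait", "painting"),
]
opacities = highlight_opacities(scene, "furniture")
```

In a 3D engine, the returned values would then be applied to the material or visibility setting of each mesh.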

4. Evaluation

As mentioned, the design and creation of the research environment took place in a co-design setting with Chiara Piccoli, but also involved frequent feedback from the other team members. Besides this internal feedback, external feedback is also of key importance to resolve usability and system issues. A more formal summative study of the analytical desktop prototype, combined with Chiara’s 3D reconstructions and research about Pieter de Graeff’s entrance hall, was conducted in June 2021 (6 participants).

Participants in the study filled out the System Usability Scale (SUS) questionnaire, a ten-item instrument that yields a usability score between 0 and 100. The resulting SUS score was 77.9. This is clearly above the commonly cited average of 68, and suggests that only minor improvements were needed.
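For readers unfamiliar with SUS, the standard scoring procedure can be sketched as follows. The function names are my own and the example responses are made up; they are not the data from our study. Each of the ten items is answered on a 1 to 5 scale; odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5.

```python
def sus_score(responses):
    """Compute the SUS score (0-100) for one participant.

    `responses` holds the ten answers in questionnaire order,
    each on a scale from 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    total = 0
    for item, answer in enumerate(responses, start=1):
        if not 1 <= answer <= 5:
            raise ValueError(f"item {item}: answer must be between 1 and 5")
        # Odd items are positively worded, even items negatively worded.
        total += (answer - 1) if item % 2 == 1 else (5 - answer)
    return total * 2.5


def mean_sus(all_responses):
    """Average the per-participant SUS scores of a study."""
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)


# Made-up example: one neutral and one maximally positive participant.
example = [[3] * 10, [5, 1] * 5]
print(mean_sus(example))
```

The study score reported above is the mean over the participants' individual scores, computed in this way.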

Most of the minor issues that came to light in the study could be fixed in the subsequent weeks. They included:

- Navigation issues. The participants' familiarity with 3D environments influenced how easy it was for them to navigate the scene. We addressed this by providing more explicit instructions, and by giving more prominence to a guided tour that explains the possibilities of the 3D environment and viewer.

- Usability issues. These issues could be resolved rather easily, and were for instance related to the selection and deselection of objects in the scene and the textual labels of features and buttons.

- Technical issues and bugs. The majority of these smaller issues could also be fixed. More substantial issues, such as lower performance on old computers, could be partially alleviated by adding settings for simplified display and custom framerates.

5. Preservation strategy

Generally, cutting-edge 3D technologies are of an unstable nature. Software and platforms continuously evolve, often leaving behind support for prior technologies. This might render apps from the past non-functional [14, 15].

To mitigate these issues, the following approach was taken in Virtual Interiors:

- to use only open tools for creating the 3D models (e.g. Blender),

- to utilize open file formats (e.g. CSV, glTF), and

- to use open technologies for creating our research environment (e.g. BabylonJS).

A key component is the open-source 3D game engine for the web named BabylonJS. We chose a web technology to provide us with the flexibility to support various platforms (ranging from mobile to desktop) and to be able to use more immersive modalities such as VR via the emerging WebXR standard.

In turn, we are publishing the underlying data and tools under an open-source license, and will make these accessible via Zenodo and DANS Easy by the end of the project. Documentation of process, demos and code is also a key focus, augmented by screencasts and screenshots of the demonstrator at different points in time.

6. Conclusion

Despite the end of my tenure at Virtual Interiors, the developments do not end here. Additional 3D reconstructions will be integrated into the research environment. Moreover, the 3D research environment will be refactored and modularized by Saan Rashid, who recently started as a software engineer in Virtual Interiors.

This blogpost discussed the creation of a demonstrator for a 3D research environment. I have outlined the challenges, our approach to tackling them, and the key importance of evaluation when creating these kinds of novel interfaces. I highlighted some of the numerous exciting opportunities to use 3D reconstructions in a research context, ultimately providing a form of “analytic immersion”.

▶ Try out the experimental prototype (desktop version) with the entrance hall of the 17th-C house of Pieter de Graeff & Jacoba Bicker, reconstructed in 3D by Chiara Piccoli (prototype version: August 2021).

Footnotes*

[1] Gane & Beer (2008)

[2] See e.g. Chittaro & Ieronutti (2004); LaViola et al. (2017); Jerald (2016)

[3] See e.g. Jacko (2012); Lewis (2012); Mayhew (1999)

[4] See e.g. Champion (2019); Wood et al. (2019)

[5] See e.g. https://www.interaction-design.org/literature/topics/design-thinking

[6] See Huurdeman & Piccoli (2020) and this summary blogpost.

[7] See Shneiderman (2002).

[8] E.g. Dede (2009), Immersive Interfaces for Engagement and Learning.

[9] See e.g. the survey of features in current 3D frameworks by Champion & Rahaman (2020).

[10] See e.g. Antoniou & Van Harmelen (2008)

[11] See e.g. De Boer et al. (2016), Linked Data for Digital History; Meroño-Peñuela et al. (2020), Ontologies in CLARIAH; Zamborlini et al. (2017), Toward a Core Conceptual Model for (Im)material Cultural Heritage.

[12] As done by e.g. Kuroczyński et al. (2016)

[13] SPARQL is a query language commonly used for Linked Data repositories.

[14] See e.g. Delve et al. (2012), The Preservation of Complex Objects, Volume 1; Lischer-Katz et al. (2017), Supporting Virtual Reality and 3D in Academic Libraries. Also, projects such as Pure3D are looking at infrastructures for 3D visualizations.

[15] More about this topic will be discussed in Huurdeman, Posthumus, Van den Heuvel, Beyond Dynamic Drawings: Restoring and Re-using Interactive 3D Visualizations (in preparation).

[16] Huurdeman & Piccoli (2021), 3D Reconstructions as Research Hubs

Abbreviated citations correspond with Huurdeman & Piccoli (2021), 3D Reconstructions as Research Hubs, full details in paper’s reference list.
